
Linux environments are notoriously difficult to manage and secure. From the operations side, a single misconfiguration can lead to costly downtime, and locating the problem is time-consuming. Setting up audit logs for Linux machines is challenging enough. Even when set up correctly, however, they’re exceptionally noisy, especially when you need to search logs across multiple machines over varying time spans. Gaining operational visibility across Linux machines reduces the time it takes to find the root cause of a problem or misconfiguration.

Challenges Tracing Issues in Linux

With Linux running over 90% of the cloud (and modern cloud-native stacks) by some estimates, it is the go-to choice for those building and operating modern applications. However, Linux is also complex, which makes root cause analysis challenging and time-consuming. Tracking who did what, and when, across a set of Linux systems is a non-trivial task.

Lack of coverage

Native to the Linux kernel, auditd offers a way to start tracking operational activities within a Linux system by recording transactional information about operating system activity. Out of the box, however, its coverage is limited. For example, the system’s defaults only include tracking:

- Logins

- Logouts

- Sudo usage

- SELinux-related messages

To get the most from auditd, you need to build customized (and correctly ordered) rules, which makes installation time-consuming and error-prone and contributes to the other issues outlined below. Depending on the OS defaults, additional rules typically need to be added to capture arbitrary commands being executed, files being touched, and so on. This is extremely important information when trying to find the root cause of a misconfiguration (i.e., who executed this command, when, and where?).

Hard to Decipher

One example of an auditd log message is shown below. Additional sources of information are required to give it context, such as mapping user IDs to user names and groups, mapping process IDs to actual process names, converting from epoch time, and so on.

type=USER_START msg=audit(1638295197.061:502618): pid=26388 uid=0 auid=1000 ses=13107 msg='op=PAM:session_open grantors=pam_keyinit,pam_keyinit,pam_limits,pam_systemd,pam_unix acct="root" exe="/usr/bin/sudo" hostname=? addr=? terminal=/dev/pts/1 res=success'
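To make the decoding burden concrete, here is a minimal Python sketch that turns the record above into something readable. It assumes the record layout shown in this post, a three-digit millisecond field in the timestamp, and a Unix host where the standard `pwd` module can resolve user IDs. The names `decode` and `user_name` are hypothetical helpers for illustration, not part of auditd.

```python
import re
from datetime import datetime, timezone

try:
    import pwd  # Unix-only; maps numeric user IDs to account names
except ImportError:
    pwd = None

# The sample record from this post, split only to keep lines short.
SAMPLE = (
    "type=USER_START msg=audit(1638295197.061:502618): pid=26388 uid=0 "
    "auid=1000 ses=13107 msg='op=PAM:session_open "
    "grantors=pam_keyinit,pam_keyinit,pam_limits,pam_systemd,pam_unix "
    'acct="root" exe="/usr/bin/sudo" hostname=? addr=? '
    "terminal=/dev/pts/1 res=success'"
)

HEADER = re.compile(r"type=(\S+) msg=audit\((\d+)\.(\d+):(\d+)\):\s*(.*)", re.S)
FIELD = re.compile(r'(\w+)=("[^"]*"|\S+)')


def user_name(uid):
    """Best-effort lookup of a numeric user ID on the local machine."""
    if pwd is not None:
        try:
            return pwd.getpwuid(int(uid)).pw_name
        except (KeyError, ValueError, OverflowError):
            pass
    return f"uid {uid}"  # fall back to the raw ID when it cannot be resolved


def decode(line):
    """Turn one raw audit record into a dict with readable time and users."""
    match = HEADER.match(line.strip())
    if match is None:
        raise ValueError("not an audit record")
    rec_type, secs, millis, serial, body = match.groups()
    # Flatten the nested msg='...' wrapper so its fields parse like the rest.
    body = body.replace("msg='", "").rstrip("'")
    fields = {key: value.strip('"') for key, value in FIELD.findall(body)}
    # Assumption: the fractional part is in milliseconds (three digits).
    when = datetime.fromtimestamp(int(secs), tz=timezone.utc)
    when = when.replace(microsecond=int(millis) * 1000)
    return {
        "type": rec_type,
        "time_utc": when.isoformat(),
        "serial": int(serial),
        "user": user_name(fields.get("uid", "?")),
        "login_user": user_name(fields.get("auid", "?")),
        **fields,
    }


if __name__ == "__main__":
    for key, value in decode(SAMPLE).items():
        print(f"{key:>10}: {value}")
```

Even this small decoder only resolves names against the machine it runs on. The same numeric ID can map to a different account on another host, which is part of why a single record is hard to interpret out of context.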

Lack of container support

With auditd, you can only run one audit daemon at a time. This means that to track container issues, you either use generic rules that provide little visibility or incorporate an additional monitoring system.

High volumes of logs

The high volumes of data that auditd generates lead to two different problems. First, depending on deployment (and the number of rules), systems may run slower with auditd enabled. Second, it increases storage costs. This means that people often choose to disable auditd, making root cause analysis nearly impossible.

Manually “stitching” Activity Across Machines and Time

Everything above considers only a single system. In practice, we are typically tracing user activity across multiple systems, so we have to examine logs from multiple machines to build a picture of the underlying activity. That activity can involve user changes and privilege escalations, making it extremely difficult to attribute it to the actual (original) user responsible.

Furthermore, this activity can span arbitrarily long timeframes, because users pause, take breaks, and come back to what they were doing. Real user activity can span hours or even days, leaving a lot of logs to parse through, if the logs are even retained for that long.
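As a rough illustration of what manual stitching involves, the Python sketch below merges per-host event lists into one timeline and measures the pauses between events. Everything in it is hypothetical: the `Event` record, the host and user names, and the sample commands are invented for illustration, and it assumes each host's events are already decoded, time-sorted, and normalized to UTC.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from heapq import merge


@dataclass(frozen=True)
class Event:
    when: datetime   # event time, assumed already normalized to UTC
    host: str
    user: str        # account the command ran as on that host
    command: str


def stitch(*per_host_logs):
    """Merge time-sorted per-host logs into a single ordered timeline."""
    return list(merge(*per_host_logs, key=lambda event: event.when))


def pauses(timeline):
    """Time elapsed between each pair of consecutive events."""
    return [later.when - earlier.when
            for earlier, later in zip(timeline, timeline[1:])]


# Hypothetical sample data: two hosts, one working session spread over hours.
start = datetime(2021, 11, 30, 14, 0, tzinfo=timezone.utc)


def at(minutes):
    return start + timedelta(minutes=minutes)


host_a = [
    Event(at(0), "host-a", "alice", "ls"),
    Event(at(180), "host-a", "alice", "ssh host-b"),
    Event(at(241), "host-a", "alice", "uptime"),
]
host_b = [
    Event(at(181), "host-b", "deploy", "sudo -i"),
    Event(at(240), "host-b", "root", "rm -rf /srv/app/config"),
]

timeline = stitch(host_a, host_b)

if __name__ == "__main__":
    gaps = [timedelta(0)] + pauses(timeline)
    for event, gap in zip(timeline, gaps):
        print(f"+{gap} {event.host} {event.user}: {event.command}")
```

Note what the sketch does not do: ordering events by time says nothing about whether `root` on `host-b` is the same person as `alice` on `host-a`. Linking the SSH hop and the privilege escalation back to the original user is exactly the attribution step that remains manual.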

Spyderbat: Can I just get a picture of the activity, please?

Spyderbat eliminates the time-consuming root cause analysis process for Linux systems. With our visualizations, customers can trace outages and activities to users and actions in minutes instead of days.

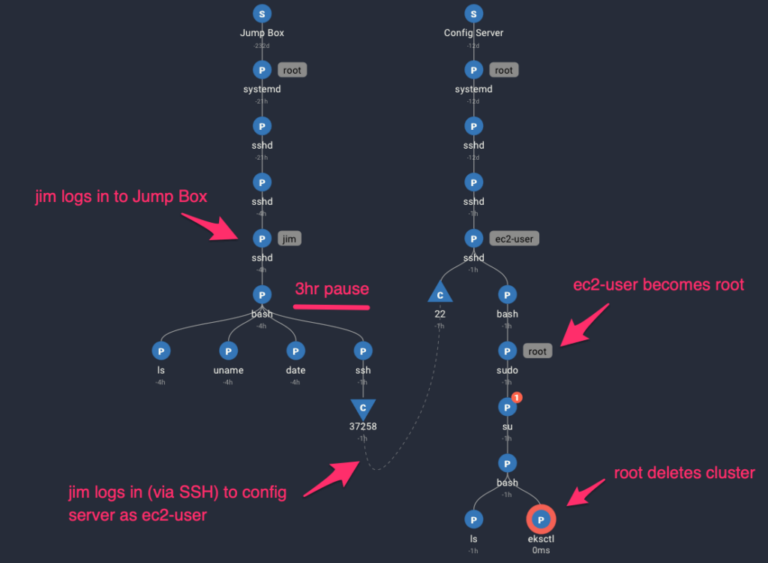

Example A: Trace to User

One customer found that a user had accidentally deleted a Kubernetes cluster(!). With auditd, tracing the change to the user and activity took three days, with engineering and security teams manually parsing logs across multiple systems to get to the root cause.

If the suspicious command in question can even be found, auditd may only show, for example, that root executed a command, and possibly the authenticated user (the user that logged into the machine). This information alone is often insufficient to pinpoint the root cause of the misconfiguration (i.e., who was the actual user that entered the command, when did it occur, and how did the user get to that point?).

Spyderbat automatically generates a trace that leads from the cluster delete command in question back to:

- Machines impacted

- Users logged into machines

- User privilege escalation/switches to other users

- User activity (actual commands entered, etc.)

Spyderbat captures the actual user activity regardless of whether the user logged into a jump host or bastion host, then logged into another machine to make a change. You also see whether they escalated their privileges or logged in as a different user that has the privileges needed so that they could make the change.

Interact with the full trace (no signup required)

In the example above, we can work backward from the actual command that deleted the cluster. The Spydertrace here shows us that it was ultimately Jim who logged into the Jump Box, ran a few commands, and then logged in via SSH to the Config Server. Once logged in, ec2-user (i.e., Jim) elevated privileges to root and then executed the AWS EKS CLI to remove the cluster. We can see the causal trace of Jim logging into one machine and then performing the activities on the second machine. Furthermore, using relative time across activities on the trace, we can see that the entire trace of activity spanned 4 hours, that Jim paused between commands for around 3 hours on the Jump Box, and that he waited around an hour between the final two commands on the Config Server. This makes it easy for us to trace activities across both space and time.

Example B: Review Runtime Behavior Across High Volumes of Changes

Many customers don’t make just one change to their environment every day. DevOps, Infrastructure as Code (IaC), Kubernetes, and cloud technologies enable more changes, more rapidly. A small DevOps team might make multiple changes per day (almost 20% of teams according to the latest CNCF survey).

Tracing a particular observed behavior to a specific change becomes overwhelming and burdensome. With Spyderbat’s visualizations, customers can apply the same review of activity and users across all (planned and unplanned) changes, effectively allowing operations teams to compare the desired state of those changes with what’s happening at run time. In short, it allows these teams to quickly compare and validate what should be happening with what is happening.

Operational Visibility for Linux: Investigate with Causation and Context

When it comes to operational visibility, getting systems back up and running as quickly as possible is mission-critical for keeping customers satisfied and ensuring productivity. Log analysis requires guesswork and manual effort, both of which lead to missed details. A common phrase in statistics holds just as true for logs: “Correlation does not imply causation.”

With Spyderbat, customers get the causal context needed to highlight problems effectively and efficiently. By blending Spyderbat into existing workflows and technologies, you gain a high-fidelity picture that gives you the efficiencies needed to streamline operations and keep business working at the speed of cloud – no log diving required!

Interested in learning more? Register for our webinar

“A Picture is Worth A Thousand Logs: Getting to the Root of Linux Issues”

Please register to view the previously recorded webinar: https://attendee.gotowebinar.com/recording/3541634025407621900
